-

Building the pipeline

Nov 19 ⎯ I wrote down my hiring playbook and it turned into a book. I decided to split it into the following three chapters:

The Talent Machine: A predictable recruiting playbook for technical roles.
Building the pipeline: A sales-driven process for hiring.
How to scale hiring: Hire hundreds of engineers without dying.

In the previous article, I argued that recruiting is just a sales process. And like any good sales process, it starts with a great product: an exceptional place to work. Now it's time to hit the streets and start selling. To do so, let's first understand how a sales process works:

Lead generation: Identify your ICP (Ideal Candidate Profile) and start collecting leads.
Qualification: Qualify the leads by checking if they match your ICP.
Discovery: Understand their needs and pain points.
Demo: Show them your product and how it can solve their problems.
Objection Handling: Address concerns.
Closing: Close the deal.

Most sales processes follow a similar flow, and hiring works much the same way. The key difference is that Objection Handling in hiring cuts both ways: you're also assessing whether the candidate is a good fit. You don't often hear a salesperson say, "Sorry, we don't think you'd make a good customer."

I'll explain how to handle each of these stages. But beware, what follows is very opinionated. If it weren't, you wouldn't need to read this article; you could just ask ChatGPT.

Lead generation

This is the most painful step of the hiring process. It's soul-crushing work, but it's the only way I know to grow your team. There are several ways to generate leads, each with its pros and cons. You'll start with outbound, but as you grow, you'll rely more on inbound and referral channels.

Lead generation: Outbound

Outbound means proactively reaching out to people. This is the first channel you have to master since, at the beginning, no one knows who you are.
Posting jobs will only get you bots or low-quality candidates, so get good at finding and contacting people yourself.

Your main tools will be LinkedIn, GitHub, and Twitter/Bluesky. You need to develop good heuristics to identify the right candidates efficiently. Spend a couple of hours every day sourcing candidates. You should find at least 5 per hour, so that's around 50 per week. With time, you'll discover ways to improve that number. Some tips:

Use advanced search filters on GitHub. Be creative.
Find companies on LinkedIn that use your stack and are located in your target market. Each company has a list of employees, so you can reach out to them. This is called poaching, and you have to do it delicately. The ecosystem will resent you if you raid a company going through a tough time. And the ecosystem matters: the more benevolent you are seen to be, the easier things will get in the future. So, allow yourself to be slightly evil, but never an asshole.
Use Twitter/Bluesky search to find candidates discussing topics or tools relevant to you.
Go to conferences and meetups and collect people's contact information.
Discord? A great dev.to article? A Hacker News post? Reddit threads? There are candidates everywhere. I even contact people writing code on their laptops at local cafés.

The ultimate outbound tip: look in unexpected places. Everyone targets the same talent pool: engineers from startups or big tech. There's no alpha there. If you stay creative and open-minded, you'll find hidden gems for a fraction of the cost. Some of the best engineers I've met were underrated and underpaid. If you're remote, explore countries with a lower cost of living but strong developer communities. If not, look at agencies or game studios, where people work under tough deadlines for much less than cushy startups pay.

It's essential to use some kind of CRM to track all your leads and the companies you're sourcing from.
You can use an ATS (Applicant Tracking System), a simple spreadsheet, or a no-code tool. I did most of my recruiting with Airtable, and it worked fine. Some information you want to collect at this stage:

Name: Real name or nickname, mostly to personalize the message.
Email: Often available on GitHub. There are tools to extract emails automatically, but don't spam anyone. A respectful email works far better than a LinkedIn message.
Positions: Which roles they might fit.
Created at: When they entered your CRM, so you know how long they've been there.
Company: Where they currently work.
Why: Why you liked this candidate and why they might be a good fit. This is a very important field: you will likely separate sourcing from contacting, and you will need this information in the cold email.
Current job start date: Avoid contacting people who recently changed jobs (2+ years in the current role is a good threshold), but keep them on file for later.
Stage: Start with "lead" and later move them through the different stages.
Source: For now, "outbound." Later, you'll identify which channels work best.
Assigned to: Probably yourself, but as you grow, ensure each candidate speaks to only one person from your company.

Persist this data. You'll need it to understand what works and what doesn't. While I don't advocate for purely data-driven hiring (too high variance), having a reality check is always helpful. Also, as you scale, more people will join the process, and candidates will pass through multiple hands.

Lead generation: Referrals

The second most important channel is referrals. It's low-volume but extremely efficient. You already know more about the candidate, and convincing them is much easier.

There's always debate about whether referrals should be incentivized. On one hand, people take a social risk when convincing friends to join. The better your company is, the lower that risk, and people who love their job will naturally tell their friends about it.
On the other hand, you don't want to encourage people to bring in unfit candidates just for the money. What worked for me was mild compensation. It's a "thank you," not a financial incentive: enough to show appreciation, but not enough to motivate people extrinsically.

Lead generation: Inbound

Inbound is what most companies overspend on: job posts, ads, and hope. It's inefficient, especially early on. Without brand recognition, the only thing you can do to attract candidates through inbound is to throw money at it: buy job postings and hope for the best. Even then, you won't get the talent you want; you'll just flood your pipeline with low-quality candidates who eat up your precious time. Use that time to do outbound instead.

Still, having job posts on your site is useful. When you reach out to candidates, they'll check your website and browse openings. Sometimes you'll get lucky: maybe someone heard you on a podcast or read an article and reaches out. Just don't rely on it as your main hiring channel.

Lead generation: Recruiters

This is where I piss off a whole industry and burn some bridges. Don't use external recruiters. If you do, eventually hire one in-house, and only one.

Companies spend absurd amounts on external recruiters, often 10–25% of a candidate's salary. Their incentives are rarely aligned with yours. That said, you might eventually need recruiters: seasonal peaks, pivots, or roles you've never hired for before. Just don't rely on them too much. They're like drugs and paid advertising: magic at first, but hard to party without them in the long run.

If you do want to use recruiters, start with several in parallel (they'll ask for exclusivity; push back on that). Later, hire the one who performs best. One is enough. And they should report to you, not HR. Otherwise, you'll have someone whose performance is judged by another department, and that's a recipe for disaster.

In my opinion, recruiters should only handle outbound sourcing.
Some companies let them do first contacts or screenings, but that's a mistake. The first call is crucial and should be done by a technical person. Engineers prefer speaking to peers, not to recruiters who confuse Java with JavaScript. Screening is delicate, and we'll discuss it later. Always have a technical person do it.

Some companies give HR veto power in the final stage. Don't do that. It's your hiring process, and you must own it, for good and bad. If HR wants to get involved, sit with them and explain the process and the decision-making criteria. Let them give input and help you improve the process, but if you don't have 100% control over it, they will slow things down and lower your chances of hiring the best candidates.

Qualification

Great, you now have leads and are ready to qualify them. This process differs slightly depending on the channel, so let's delve into it (not written by an LLM, I'm just teasing you).

Qualification: Outbound

You've spent a week sourcing and now have 50 leads. Time to reach out. If possible, contact them via email. If not, try Twitter/Bluesky DMs, then LinkedIn: from most personal to most professional.

Craft a banger message. The title should be direct, and the body should feel personal and unique for each candidate. My go-to structure:

Title: Simple, human, and direct. If you have a good title (CTO, VP of Engineering), show it off. Let them know you're not a recruiter. What works best for me is something like: "Hi, I'm the [your title] of [company name], and I want you to join us."
Body:
Who you are and what the company does: A brief, clear intro and a pitch that works.
Why you're reaching out: Be genuine and flattering. Mention what caught your eye: their OSS project, experience, or work you admire. This is the personalized part, and hopefully you saved it in your CRM.
Why you're hiring: Explain why the role exists and its expected impact.
Your product: Describe what working at your company feels like. If you sourced right, it will resonate.
Link to your calendar: Invite them to chat. Make it sound casual, not like an interview.
Link to your Employee Handbook: The mic drop. This document explains everything they need to know about working with you. Candidates who say "hell yeah" will save you time, and those who decline save you even more.

Writing well is important. You have two seconds to grab their attention, so you'd better get good at this. I recommend newsletters like Marketing Examples to get better at copywriting.

A good message can get around a 15% response rate. From 50 sourced candidates, that's about seven qualified leads per week. Not bad!

Qualification: Inbound & Referrals

Less exciting but necessary. Define your criteria and process, then go through your ATS or CRM. Discard those who clearly don't fit, and send the rest a calendar link. If someone isn't right for a current role but could fit later, keep them. Build a future pipeline.

Discovery & Demo

I like to bundle discovery and demo into a single step: the first interview.

First rule: don't call it an interview. It's a chat. If you're meeting in person, have a coffee or a beer, but not in the office. This keeps it personal, not transactional. You want the candidate to open up.

The goal is twofold:

Understand who they are and what they want in life.
Show them how your company fits that vision.

That order matters. Gather information first to personalize your pitch. Are they aiming to be a CTO in five years? Great. Show them that path.

Make them comfortable. For instance, I try to make candidates laugh in the first two minutes. Be clumsy, be human. Like Dr. Slump's Senbei Norimaki, you can get serious later.

Ask open-ended questions and let them do the talking. When they open up a topic, dig deeper. When they don't, try mirroring (repeat the last thing they said).

Me: So, how is work nowadays?
Candidate: It's ok.
<crickets, he's not going to elaborate... time to mirror>
Me: It's ok?
Candidate: Yeah. Good salary, and the manager is ok.
Me: What do you mean, the manager is ok?
Candidate: You know, I had non-technical managers who just asked "how's the project going?" I want someone technical who can help me grow.
Me: Yeah, I've seen that too. How does he help you grow? What does grow even mean?

This is great. Now we are getting somewhere interesting, and the candidate feels more comfortable and willing to share. Mirroring works wonders.

Don't rush to reject quiet candidates (some recruiters or HR people will). Some are bad at interviewing but brilliant at work. They're undervalued by the market and often the best hires.

For getting information out of interviews, I highly recommend reading Never Split the Difference by Chris Voss. Yes, I know. The book is about hostage negotiation, but the principles are similar: building trust so the conversation can lead to a positive outcome for both parties.

You can also sprinkle the conversation with some open-ended questions that help complete the picture. Remember: you are not evaluating, you are discovering who you have in front of you. Experiment and write down the questions that work best for you. Here are some that I've used in the past:

What's something you don't want to do?
If you could write your perfect job description, what would it be?
What makes you happy, angry, or sad?
What rabbit holes have you chased recently? Why?
If you didn't have to work anymore, what would you do?

The second part of the chat flips the roles: you talk, they listen. Be charming, be honest, and remember that not everyone will like you, and that's fine. It's a numbers game. The goal is for them to leave thinking, "Hell yeah, I want to join tomorrow."

Most companies get this backward. They drag candidates through hoops, then try to excite them at the end. You must excite them early because candidates are never in just your process. Once someone starts interviewing with you, they're also interviewing elsewhere.
If you win their excitement and move fast, you'll likely win them and shorten the time to hire. That's the main difference between buying and selling.

During this second half of the chat, I recommend covering the following points:

Why the company exists.
The product and the market.
The company's story and your background.
Your financial situation. Be honest. This matters to a lot of engineers. Are you profitable? Say it loud; they will love that. Did you just raise a round? Mention it. Are you running out of money? I'm sorry about that, but mention it too. They will figure it out sooner or later, and you would rather have them not join than leave after one week and waste your precious recruiting efforts.
The team.
The culture.
The Employee Handbook: review it together so there are no questions or surprises. Talk about the employee lifecycle, the company cadence, and whatnot.

If done correctly, the only thing left is for you to discover whether you want to work with them or not (buying). This means that salary is agreed at this point, not after the last interview. This is very important. There is nothing worse than spending countless hours only to lose the candidate at the last step. And it's not fair to the candidate either. Don't delay it. If you don't like talking about money, rip the band-aid off. After all, you already know the price tag.

If the candidate is stronger or weaker than expected, adjust by offering a higher or lower position, not by haggling over salary. People hate salary negotiation but are fine negotiating roles.

Wrap up by thanking them and asking if they'd like to start the process (remember, this was not an interview). If you've done well, they'll say, "Let's fucking go." Then schedule the technical interview.

If your company is genuinely appealing, you should convert around 80% of these; if you don't, improve your pitch. From 7 qualified leads, that's about 5 interviews.

Objection handling

Now comes what you're probably already doing: interviewing candidates.
It's time to figure out whether this person is really a good fit for the position. My advice is to keep it simple and short. That's it. The rest is fluff. In my experience (and from what other companies report), each interview has diminishing returns. During the first one, you get most of the information you need; in the following ones, not so much. Every extra interview decreases your chances of closing the candidate. Remember, you are competing against other companies, and whoever makes an offer first has an edge.

False positives and negatives are inevitable, no matter how much you interview. If you believe that talent follows a fat-tailed distribution (I do), your strategy should be to minimize false negatives: the ones who get away. Passing on a future Jeff Dean is worse than hiring someone who doesn't work out. Your team, like most investment portfolios, will end up following a Pareto distribution: a very small set of engineers will be responsible for the majority of the company's success.

This doesn't mean you should only hire staff+ engineers and ignore everyone else. It means you should evaluate candidates not for what they know but for what they can learn. Knowledge is easy, and getting easier by the day. But creative, curious minds are scarce.

Unfortunately, most interviews still test knowledge. Candidates can easily master the interview process, the same way LLMs ace benchmarks after ingesting the benchmark data. What are you really testing by having a candidate solve an algorithm or data structure problem? That they've practiced "Mastering the Job Interview"? It's as absurd as IQ testing, which mostly predicts how good someone is at taking IQ tests.

The best interviews are conversational. They're hard to fake, and if you ask the right questions, you'll learn everything you need to learn in just one interview. If you don't believe me, listen to how Tyler Cowen interviews people, and notice how they crumble under intellectual pressure.
In these conversations, you can see how candidates reason, linearize complex thoughts, and connect or create ideas in real time. Tease them with humor. I've never seen an LLM make me laugh, and humor is a better proxy for intelligence than logic puzzles.

When you're in front of a brilliant mind, it's a fantastic experience. But it's not quantifiable: it's an "I know it when I see it" kind of thing. There's no scorecard for a great conversation, and in my experience your gut will be right about 90% of the time. That's a fantastic hit rate, and you won't improve it no matter how many tests you make the candidate endure.

How is that interview structured? Is it just talking? Isn't this "too easy"? Hold your horses! First of all, difficulty doesn't lower error rates; it just builds exclusivity theater. And no, it's not just talking:

Ask the candidate to bring code they've written. It can be an open-source project, a side project, or a take-home exercise they did for another company. At some point, I got to know most companies' homework assignments in Barcelona. They weren't very good. Candidates love this approach. As I mentioned earlier, they're usually interviewing with several companies, trying to maximize their chances of success. Skipping yet another take-home exercise removes a massive speed bump for both sides.
Assemble a team of 2 or 3 engineers to interview the candidate (yourself included if you are part of this hiring process). Usually 2 senior+ engineers and 1 junior/mid who is mostly shadowing and learning how to interview.
Spend 1 to 2 hours with each candidate. For manager roles or staff+ positions, you might want a second interview to touch on different topics in more depth.

If the code they bring is not that interesting, just ask open-ended questions. Those shouldn't have a correct answer, like most things in life. They need to be juicy and have different levels of depth, like most things in life (2). Some questions that I've used in the past:

Role play. The CEO storms in, stressed because customers are complaining the app is down. What do you do? Whatever they say, dig deeper. If it's a senior role, the root cause should be something gnarly: Postgres transaction ID wraparound, a rogue Bitcoin miner, or maybe a solar flare flipping a bit.
You're hired, and your first task is to add search. What do you do? This opens discussions about databases, synchronization, change data capture, and search algorithms.
How would you architect a real-time game? Why is it different from a traditional SPA? This explores distributed systems and state synchronization.
Tell me about a time when you made a technical decision that had a meaningful business impact. Oh boy, do they trip over this one.
Tell me about your biggest fuckup. What did you learn from it?

That's it. You have a conversation, review the code, and follow your instinct. If you feel good about the candidate, make an offer.

The percentage of people who pass this process will largely depend on your risk aversion and on how well you sourced and ran the first interview. You'll usually see 20–50% of candidates pass. Lower than that means too strict; higher means too lenient. But take those numbers with a grain of salt. The sample sizes are small, and you'll have lucky and unlucky streaks. From the previous 5 candidates, this should leave you around 2 candidates to make an offer to. Getting there! Are you excited? Let's go!

Closing

The final step is to make an offer. You need a badass template ready with all the details and a deadline.

A deadline? Don't engineers hate deadlines? Yes, they do. But job offers without deadlines suck for everyone. Here's how things usually go wrong: you send an offer, freeze the position because you don't want to be an asshole, and the candidate goes silent. One week passes, then two, then three. Suddenly, they tell you they've accepted another offer from a slower process than yours. Bummer. You're now three weeks behind schedule.
Sure, you could keep interviewing candidates instead of freezing the position, but that's even worse for the candidate, who might have already told their current employer they're leaving.

The solution is simple: set a deadline and be transparent about it. Tell them, "I'll freeze this position for you for one week. After that, I'll continue hiring for it." It's fair for both parties. Everyone has time to think and decide, and the consequences are clear.

More importantly, a deadline creates urgency, and urgency triggers emotion. If you've done the first interview well, the candidate will be excited enough to overcome the fear of leaving their safe job or of walking away from other interview processes.

Track how long it takes from first contact to offer. Your close rate drops as your time to hire grows. A realistic, excellent time to offer is two weeks.

The offer stage should have one of the highest close rates in the entire process, since all details have already been discussed and agreed on. At this point, it's just a final decision. A good close rate is around 80–90%. That means that from your last two candidates, at least one will likely accept.
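Because each stage's rate multiplies the output of the previous one, the numbers quoted throughout this article can be chained into a weekly back-of-the-envelope funnel. Below is a minimal Python sketch, assuming 50 sourced leads per week plus the article's 15% reply rate and 80% first-chat conversion; the 35% interview pass rate and 85% close rate are midpoints of the 20–50% and 80–90% ranges above, chosen only for illustration. The stage labels and the `run_funnel` / `weeks_to_hire` helpers are hypothetical, not part of any ATS or CRM.

```python
from __future__ import annotations

# Hypothetical stage labels. The 0.15 and 0.80 rates come from the article;
# 0.35 and 0.85 are assumed midpoints of its 20-50% and 80-90% ranges.
FUNNEL = [
    ("qualified lead (replied to outreach)", 0.15),
    ("interview (said yes after the first chat)", 0.80),
    ("offer (passed the interview)", 0.35),
    ("hire (accepted the offer)", 0.85),
]


def run_funnel(sourced: float, stages=FUNNEL) -> list[tuple[str, float]]:
    """Return the expected number of candidates left after each stage."""
    counts = []
    remaining = sourced
    for name, rate in stages:
        remaining *= rate  # each stage keeps a fixed fraction of the previous one
        counts.append((name, remaining))
    return counts


def weeks_to_hire(target_hires: int, sourced_per_week: float, stages=FUNNEL) -> float:
    """Average weeks of steady sourcing needed to reach target_hires."""
    hires_per_week = run_funnel(sourced_per_week, stages)[-1][1]
    return target_hires / hires_per_week


if __name__ == "__main__":
    # 50 leads per week: a couple of hours a day at ~5 leads per hour.
    for stage, expected in run_funnel(50):
        print(f"{stage:45s} {expected:5.2f}")
    print(f"weeks to make 10 hires: {weeks_to_hire(10, 50):.1f}")
```

With these assumptions, one person sourcing 50 leads a week ends up with roughly 1.8 hires per week, so ten hires take about five and a half weeks of steady sourcing. Because the rates multiply, a 10% relative improvement at any single stage raises the final number of hires by the same 10%, which makes it easy to compare where extra effort pays off.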

-

The Talent Machine

Nov 12 ⎯ I wrote down my hiring playbook and it turned out to be massive. I decided to split it into the following three chapters:

The Talent Machine: A predictable recruiting playbook for technical roles.
Building the pipeline: A sales-driven process for hiring.
Hiring Scaling: Hire hundreds of engineers without dying.

The room is packed. Mostly men in their 30s, wearing swag t-shirts and jeans. I'm on stage giving it all, explaining how I helped build a unicorn in Spain, an unusual creature for the local fauna.

I never rehearse my talks. Like a large language model, I generate one token at a time, hoping each will lead to something the audience wants to hear. When it works, it's magic and I feel like a genius. When it doesn't, I look like a complete idiot. A successful idiot, though, since I'm the one standing on stage.

This time around, they nod, laugh, and even clap at the end. I delivered.

"Any questions?" I ask.

My hands get a bit sweaty, but it's always the same question, and I'm ready for it: "How do you hire engineers? It's so hard."

Hiring engineers is a sales job

The best engineers don't apply for jobs. They join people who make them believe. So stop waiting, and go sell.
No one – I made it up

There are two ways to approach hiring:

Buying talent.
Selling positions.

They sound similar, but they are actually opposites. Companies with a buying mindset typically throw money at the problem. They hire a team of recruiters, pay for job board postings, and optimize for filtering.

In contrast, companies with a selling mindset spend time defining roles as products and positioning them in the market. They proactively look for candidates and engage them in what is, essentially, just a sales process. The emphasis shifts from weeding out "bad" candidates to convincing great ones.

For the rest of this article, I will outline my sales-based recruiting playbook, the one I used to scale a team to 150 engineers in 3 years with only one recruiter.
What follows might not all apply to you, but you might learn a thing or two.

Product and Positioning

To find a unique position, you must ignore conventional logic. Conventional logic says you find your concept inside yourself or inside the product. Not true. What you must do is look inside the prospect's mind.
Al Ries – Positioning: The Battle for Your Mind

The first thing you need to decide is what product you can sell to candidates. This "product" is the overlap between what you need and what candidates want. Essentially, you're defining your value proposition as an employer, and it will drive everything else you do.

Ask yourself some key questions about the opportunity you're offering:

Depth vs. Breadth: Is your engineering work deep (specialized, fewer engineers needed) or broad (many generalists needed)?
Location: Where is your team based? Is it in a tech hub or a more remote location?
Remote Work: What is your stance on remote or hybrid work?
Diversity & Inclusion: How do you approach building a diverse and inclusive team?
Work Culture: Do you prioritize work-life balance, or are you a fast-paced, mission-driven culture?
Mission & Impact: Do you have a powerful mission or compelling problem that will inspire candidates?
Technology Stack: What does your tech stack look like? Modern and exciting, or stable and boring (be honest)?
Reputation: Are you a known leader in your market?
Founder/Team: Who are the people behind the company, and why are they exciting to work with?
Compensation: How much can you realistically pay (both salary and other benefits)?

Each of these questions helps define the market you're addressing and sets realistic expectations for your talent pool. Be brutally honest here about what you can offer and where you fall short. If your product is a B2B tool for accountants on a COBOL codebase and you're based in Tbilisi (not exactly a tech magnet), you might need to offer remote work or other perks to expand your reach.
On the other hand, if you're curing cancer, offering a 4-day week, or paying the highest salaries, you can probably get away with murder (figuratively speaking).

As tempting as it is, don't chase the largest talent pool for the sake of it. Everyone else already does. Instead, look for under-served pockets of talent: groups that match your company's values or needs but aren't being courted aggressively.

For example, you could default to mainstream stacks like JavaScript or Python because they have the most developers. But that also means more companies looking for the same candidates. Choosing a more niche technology (say, Elixir or Clojure) narrows the pool but also the competition. You'll often find engineers who are more passionate, more loyal, and easier to hire because fewer companies are after them.

At Factorial, the company I founded, I applied the same idea. Most startups were obsessed with hiring 20-something engineers who'd stay late playing ping-pong and drinking beers. I was in my 30s, starting a family, and didn't want that lifestyle anymore, so I turned it into a hiring advantage. I targeted experienced engineers in their 30s and 40s who wanted stability, flexibility, and purpose. All my messaging, from the cold emails to the interviews, revolved around that positioning, and it paid off: we attracted senior talent early and built a mature, highly productive team very fast.

Once you understand what you can sell and who your market is, write it all down in an Employee Handbook. This is a public doc you'll share with candidates. It should clearly articulate everything about working at your company. The goal is for the right people to read it and think, "Yeah, this company is for me." Conversely, if someone reads it and thinks, "Nope, not my vibe," that's actually good: you've just saved everyone's time by filtering out a poor fit early.
Compensation

One of the most important parts of positioning your "product" is pricing it correctly. And correctly means setting compensation such that you can attract the talent you need without running out of money.

Engineers will likely be one of the biggest expenses for your company, so you must understand how many engineers you actually need and how much you can afford to pay them. If you're a bootstrapped company, you will know. But if you're VC-funded, be careful: $5M in the bank might look like infinite runway, but at $150k per engineer per year, that's only 11 engineers for 3 years, and that's assuming the money goes to nothing but salaries.

Once you are confident with the numbers, I highly recommend publishing the salary for each role in your Employee Handbook. In fact, I go a step further: fixed salaries per level, not ranges. Create a dual-track ladder (one track for individual contributors, one for managers) with clear levels and a fixed salary at each level, alongside a rubric of what each level means.

But wait, wasn't the Employee Handbook public? Yes! Having a document you can share openly massively increases your chances of hiring top talent. Candidates will often find a public salary table fairer, even if your numbers are a bit lower than what they could get elsewhere, simply because it's transparent and applies to everyone. Engineers, raised in open-source culture, thrive on transparency and clarity. If you are not clear, or you hide information, they will assume you are just bullshitting.

Fixed salaries (no negotiation, no ranges) are a bit unconventional, but in my experience they're a net positive:

No Negotiation Dance: Ranges invite negotiation, and many engineers dislike the salary negotiation process. They feel dirty talking about money, like a mercenary instead of a craftsman. By offering a fixed number, you spare candidates the stress and uncertainty.
Internal Equity: Ranges create pay disparities among peers that can breed resentment. If two engineers perform the same role but one managed to haggle for $5K more, you'll eventually have tension (especially as teams grow and people talk).
Perceived Fairness: A single number feels less arbitrary than a range. Candidates know exactly where they stand and what the offer is, which many find refreshingly predictable and fair.
Simplicity: It streamlines the process. You can discuss comp very early and easily (more on that later) since there's a single figure on the table.

Downsides? Sure, a fixed scheme means you might lose a great candidate who has a higher expectation for that level, since you're not negotiating. That lack of flexibility can seem like a disadvantage. However, I view it differently: if your fixed salaries are truly too low to close good candidates, the answer is not ad-hoc negotiation; it's to raise the fixed salaries for everyone. In other words, if you're consistently losing hires due to comp, adjust the fixed numbers upward for that role. But if you've done your homework on market rates and you're paying enough (you should!), then money usually won't be the problem. By removing room for negotiation, you also remove excuses like "we have to pay more to close this hire," which forces everyone to focus on the non-monetary value your company offers.

The trimodal nature of software engineering salaries

Software engineering compensation has splintered into three distinct tiers in many markets. Tier 1 represents local companies or startups paying local-market rates, Tier 2 includes well-funded or mid-size companies paying above local averages, and Tier 3 is Big Tech and top-tier firms paying globally competitive packages.
Gergely Orosz – The Pragmatic Engineer

A few years ago, Gergely Orosz famously described how software engineer salaries in Europe had split into a trimodal distribution. They are no longer one bell curve, but three separate peaks for three categories of companies.
- Tier 1: Companies that pay around the local market average (often non-tech companies or smaller startups). In the Netherlands, this was something like €50-75K for a senior engineer.
- Tier 2: Companies that pay above the local average, competing for talent in-region (e.g. well-funded scale-ups, certain mid-sized tech companies). Senior engineer comp might be €75-125K in this tier.
- Tier 3: Big Tech and international firms that pay globally competitive rates, often 2-3x what Tier 1 offers (senior packages of €125-250K+ in Europe). These are the Googles, Facebooks, Ubers, high-frequency trading firms, etc., who benchmark pay worldwide.

Why does this matter in our context? It’s useful for calibrating your hiring strategy. If you're a Tier 3 company (printing money or with SoftBank on your board), you can chase top 1% (p99) talent with huge offers. But most of us are not in that position. And frankly, if you’re targeting the top 10% (p90) of engineers, it doesn’t really matter whether they come from a Tier 1 or Tier 2 company.

In my anecdotal experience (don’t be offended, this is just my observation), Tier 3 companies do have some of the absolute best engineers, but on average they have the same talent density as the other tiers. Their sheer size and attractive brand mean they hire a lot of people, not all of whom are geniuses.

[Image: Talent distribution over salary tiers]

So unless you’re able to back a truck of cash up to someone's house, your chances of poaching top talent from Google/Facebook/etc. are rather slim. You’re better off finding excellent engineers in Tier 1 and Tier 2 companies who are perhaps undervalued or looking for a change. Many of the most skilled engineers I’ve hired were “undiscovered” talent at lesser-known companies. Be open-minded and look where no one else is looking. Always be searching for alpha in the hiring market.

Employee lifecycle

Lastly, as part of your product, you should document the employee lifecycle at your company, from start to finish.
A person considering joining you wants to know what to expect, and they will love the transparency. In your Employee Handbook, cover topics such as:

- Hiring process: How many interviews will there be, and who conducts them? What skills or qualities are you evaluating at each step?
- Post-interview: What happens after the interviews? How quickly can candidates expect feedback or a decision?
- Onboarding: What do the first week, 30 days, and 90 days look like for a new hire? What support do you provide to get them up to speed?
- Career progression: How do promotions work at your company? What are the expectations for each level or title, and how can one progress? (This often ties into the dual-track framework you created for salaries.)
- Cadence and culture: What is the rhythm of work? For example, describe the typical company cadence: yearly planning, quarterly goals, monthly all-hands, weekly team rituals, daily stand-ups, etc. Give candidates a sense of the day-to-day and the overall tempo.

Writing all this out for the first time can feel overwhelming. Don’t worry. It doesn’t have to be perfect. The Employee Handbook is a living document, and you'll update it as you learn and grow. But trust me, the upfront effort is worth it. All this detail and clarity will save you so much time in the long run. Hiring people (and then potentially losing them due to wrong expectations) is far more time-consuming and costly than writing a document.

Look for inspiration and read how other companies are doing things. Steal, copy, modify, twist and shout. And do it again, until you find your message and voice. Here are some examples and resources:

- List of public career frameworks
- List of company handbooks

That’s all for today. The next article is massive, and it explains the whole recruiting pipeline in excruciating detail. Please subscribe to get updates in your email!

-

Antimemetics

Sep 16 ⎯ One of Charlie Munger’s most powerful mental models is to “Invert, always invert.” This book does exactly that by exploring the antithesis of memes: antimemes. These are ideas that, instead of spreading easily, fail to be retained, individually or collectively. I’m only halfway through this book, but I can already recommend it. It’s packed with fascinating concepts that make your brain bubble and sizzle. It touches on topics that feel very close to fika: building an alternative to the hostile, chaotic, meme-overloaded internet.

[Image: xkcd tar bomb]

In a world where the Wario/Mario dichotomy exists, I can’t help but feel it was a missed opportunity to call them Wemes. But that’s not on the author: the name comes from the novel There Is No Antimemetics Division.

- book

- antimemetics

-

Can it run Doom?

Sep 12 ⎯ One of my favorite activities with my kids is visiting science museums, especially the interactive ones. Pull a lever, twist a knob, press a button, and watch the effects of gravity, light, or fluid dynamics come to life. It’s so much better than just reading about it!

Content creators have recently started applying the same approach to long-form articles. Some concepts are simply better explained through experimentation, and it’s more engaging and rewarding for the reader. Unfortunately, most publishing platforms don’t make it easy to create this kind of content. Right now, it’s mostly developers who have access to it. I want to change that by introducing a new feature at fika: Snippets.

Snippets let you easily embed interactive elements within your article. For now, you’ll need to code the Snippets yourself or rely on your LLM of choice. I may integrate this into the product later, but let me show you how it works with an example.

Imagine I want to represent a double pendulum and its chaotic nature. I prompt ChatGPT with:

Create an html document with a simulation of a double pendulum that leaves a trail of their edge. Transparent background, 400px height. The pendulum is black and the traces are pastel blue

Once it’s done, I click on “Copy code” and paste it inside an article Snippet. It looks like this:

Beautiful! I’m a developer myself, but I don’t know the physics of pendulums by heart.

So, now that we’ve demonstrated that you can add Snippets to your articles, the next question is whether the title of this article is just clickbait, or if it actually delivers. Can it run Doom? Yes, of course!
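For the curious, here is a rough idea of the physics such a Snippet has to simulate. This is a minimal Python sketch, not the HTML the LLM generated: it assumes point masses on massless rigid rods with no friction, uses the standard equations of motion for an ideal double pendulum, and integrates them with a classic fourth-order Runge-Kutta step. The masses, lengths, and function names are illustrative choices.

```python
import math

G = 9.81        # gravitational acceleration (m/s^2)
M1 = M2 = 1.0   # bob masses (kg), illustrative values
L1 = L2 = 1.0   # rod lengths (m), illustrative values


def derivatives(state):
    """Time derivatives of (theta1, omega1, theta2, omega2); angles from the downward vertical."""
    t1, w1, t2, w2 = state
    delta = t1 - t2
    den = 2 * M1 + M2 - M2 * math.cos(2 * delta)
    a1 = (-G * (2 * M1 + M2) * math.sin(t1)
          - M2 * G * math.sin(t1 - 2 * t2)
          - 2 * math.sin(delta) * M2 * (w2 ** 2 * L2 + w1 ** 2 * L1 * math.cos(delta))) / (L1 * den)
    a2 = (2 * math.sin(delta) * (w1 ** 2 * L1 * (M1 + M2)
                                 + G * (M1 + M2) * math.cos(t1)
                                 + w2 ** 2 * L2 * M2 * math.cos(delta))) / (L2 * den)
    return (w1, a1, w2, a2)


def rk4_step(state, dt):
    """Advance the state by one classic fourth-order Runge-Kutta step."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))
    k1 = derivatives(state)
    k2 = derivatives(shifted(state, k1, dt / 2))
    k3 = derivatives(shifted(state, k2, dt / 2))
    k4 = derivatives(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def energy(state):
    """Total mechanical energy; without friction it should stay (nearly) constant."""
    t1, w1, t2, w2 = state
    kinetic = (0.5 * M1 * (L1 * w1) ** 2
               + 0.5 * M2 * ((L1 * w1) ** 2 + (L2 * w2) ** 2
                             + 2 * L1 * L2 * w1 * w2 * math.cos(t1 - t2)))
    potential = -(M1 + M2) * G * L1 * math.cos(t1) - M2 * G * L2 * math.cos(t2)
    return kinetic + potential


def simulate(state, dt=0.001, steps=10_000):
    """Integrate and record the trail of the lower bob (x to the right, y downward from the pivot)."""
    trail = []
    for _ in range(steps):
        state = rk4_step(state, dt)
        t1, _, t2, _ = state
        x2 = L1 * math.sin(t1) + L2 * math.sin(t2)
        y2 = L1 * math.cos(t1) + L2 * math.cos(t2)
        trail.append((x2, y2))
    return state, trail
```

Calling `simulate((2.0, 0.0, 2.5, 0.0))` returns the final state plus the trail of the lower bob, which is what the Snippet draws in pastel blue. Checking that `energy()` stays nearly constant is a cheap way to confirm the integrator isn't drifting; the chaos shows up when you nudge a large starting angle very slightly and, over a long enough run, end up with a completely different trail.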

- fika

-

Local-first search

Jul 31 ⎯ I hear a lot of people debating whether to adopt a local‑first architecture. This approach is often marketed as a near-magical solution that gives users the best of both worlds: cloud-like collaboration with zero latency and offline capability. Advocates even claim it can improve developer experience (DX) by simplifying state handling and reducing server costs.

After two years of building applications in this paradigm, however, I’ve found the reality is more nuanced. Local-first applications do have major benefits for users, but I think the DX claims fall off a cliff as your application grows in complexity or volume of data. In other words, the more state your app manages, the harder your life as a developer becomes.

[Image: DX differences between local-first and cloud-based applications]

One area I struggled with the most was implementing full-text search for Fika, the local-first app I used to write this very blog post. Now that I’m finally happy with the solution, I want to share the journey with y’all to illustrate how local-first ideals can be at odds with practical constraints.

Search at Fika

Fika is a local-first web application built with Replicache (for syncing), Postgres (as the authoritative database), and Cloudflare Workers (as the server runtime). It’s a platform for content curators, and it has three types of entities that need to be searchable: stories, feeds, and blog posts. A power user can easily have ~10k of those entities, and each entity can contain up to ~10k characters of text. In other words, we’re dealing with on the order of 100 million characters of text that might need to be searched locally.

To deliver a good search experience for Fika’s users, I had a few specific requirements in mind:

- Good results: This sounds obvious, but many search solutions don’t actually deliver relevant results. In information retrieval terms, I wanted to maximize Recall@k: roughly, the fraction of relevant documents that appear in the top k results.
- Fuzziness: We don’t always remember the exact word we’re looking for. Was it “index” or “indexing”? Does décérébré really have 5 accents? The search needs to tolerate small differences in spelling and word forms. Techniques like stemming, lemmatization, or more generally typo tolerance (a form of fuzzy search) help ensure that minor mismatches don’t result in zero hits.
- Highlighting: A good search UI should make it obvious why a result matched the query. Showing the matching keywords in context (highlighted in the result snippet) helps users understand why a given item is in the results.
- Hybrid search: This is a fancy term for combining traditional keyword search with vector-based semantic search. In a hybrid approach, the search engine can return results that either literally match the query terms or are semantically related via embeddings. The goal is to get the precision of keyword (sparse) search plus the recall of semantic (dense) search.
- Local-first: Search is one of the main features of Fika, and it needs to work reliably and fast under any network condition. Other excellent bookmark managers like Raindrop struggle to support offline use, since they are built on a cloud-based architecture. This is a major differentiation point for Fika.

With these goals in mind, I iteratively tried several implementations. The journey took me from a purely server-based approach to a fully local solution. Let’s dive in!

First attempt: Postgres is awesome ok

My first attempt was decidedly not local-first: I just wanted a baseline server-side implementation. I drank the “Postgres can do everything out of the box” Kool-Aid and implemented hybrid search directly in Postgres. The idea was seductive: if I could make Postgres handle full-text indexing and vector similarity, I wouldn’t need a separate search service or pipeline. Fewer moving parts, right?

[Image: First architecture: Pure Postgres]

Well, reality hit hard. I ran into all sorts of quirks.
For example, the built-in unaccent function (to strip accents for diacritic-insensitive search) isn’t marked as immutable in Postgres, which means you can’t use it in index expressions without jumping through hoops. Many other rough edges cropped up, turning what I hoped would be an “out-of-the-box” solution into time wasted reading the Postgres docs and StackOverflow.

In the end, I did get a Postgres-based search working, but the relevance of the results just wasn’t great. One reason may be that vanilla Postgres full-text search doesn’t use modern ranking algorithms like BM25 for relevance scoring; its default ts_rank is a more primitive approach. This was 2 years ago, so it would be worth trying again, since ParadeDB’s new pg_search looks very promising!

Second attempt: Typesense

Having been humbled by Postgres, my next move was to try Typesense, a modern open-source search engine that brands itself as a simpler alternative to heavyweight systems like Elasticsearch. Within a couple of hours of playing with Typesense, I had an index up and running. It pretty much worked out of the box. All the features I wanted were supported with straightforward configuration, and the developer experience was night and day compared to wrestling with Postgres.

[Image: Second architecture with Typesense]

To integrate Typesense, I did have to modify the pipeline so that whenever a story/feed/post was created or updated in Postgres, it would also be upserted into the Typesense index (and deleted from the index if removed from the DB). But this was pretty manageable, far easier than dealing with Postgres. Sometimes we obsess over simple architectures that end up being harder to deal with. Simple ≠ easy.

At this point, I had a solid server-side search solution. However, it wasn’t local. Searches still had to hit my server. This meant no offline search, and even though Typesense is fast, it couldn’t match the latency of having the data on device.
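The dual-write step from the Typesense setup can be sketched as a small hook that mirrors every committed database change into the search index. This is a hypothetical Python sketch, not Fika’s code: `SearchIndex` is an in-memory stand-in for a real search client (it is not the Typesense API), and `on_change` is an illustrative name for whatever runs after a create, update, or delete is committed in Postgres.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SearchIndex:
    """Hypothetical in-memory stand-in for a search engine collection."""
    docs: dict = field(default_factory=dict)

    def upsert(self, doc_id: str, text: str) -> None:
        # Insert or overwrite the searchable copy of the document.
        self.docs[doc_id] = text

    def delete(self, doc_id: str) -> None:
        # Deleting a document that was never indexed is a no-op.
        self.docs.pop(doc_id, None)


def on_change(index: SearchIndex, doc_id: str, text: Optional[str]) -> None:
    """Mirror one committed DB change into the index.

    `text is None` means the row was deleted; anything else is a create or update.
    """
    if text is None:
        index.delete(doc_id)
    else:
        index.upsert(doc_id, text)
```

Because both operations are idempotent in this sketch, replaying the hook for the same row (for example after a failed request) converges to the same index state, which is what keeps a dual-write pipeline like this manageable.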
For Fika’s use case (quickly pulling up an article on a phone while offline or on a spotty connection), I wanted to push further. It was time to bring the search engine into the browser. <suspense music>

Third attempt: Local-first with Orama

If you’ve ever looked into client-side search libraries, you might have noticed the landscape hasn’t changed much in the last decade. We have classics like Lunr.js or Elasticlunr, but many are unmaintained or not designed for the volumes of data I was dealing with. Then I came across a relatively new, shiny project called Orama. Orama is an in-memory search engine written in TypeScript that runs entirely in the browser. The project supports full-text search, vector search, and even hybrid search. It sounded almost too good to be true, and despite the landing page not being ugly (always a bad sign), I decided to give it a shot.

[Image: Third architecture: Local-first Orama]

To my surprise, Orama delivered on a lot of its promises. Setting it up was straightforward, and it indeed supported everything I needed. I was able to index my content and perform keyword searches locally. This was pretty mind-blowing: I had Elasticsearch-like capabilities running in the browser, on my own data, with no server round-trip. Awesome.

But, and they say everything that comes before a “but” is bullshit, there were new challenges. The first issue was data sync and storage. To get all my ~10k entities into the Orama index, I needed to feed their text content to the browser. Fika uses Replicache to sync data, but originally I wasn’t syncing full bodies of articles to the client (just titles and metadata). It turns out that storing tons of large text blobs in Replicache’s IndexedDB store can slow things down. Replicache is optimized for lots of small key/value pairs, and shoving entire 10k-character documents into it pushes it beyond its sweet spot (the Replicache docs suggest keeping key-value size under 1MB).
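To see why an in-memory engine needs every document body on the client, and why its index costs RAM, here is a toy inverted index in Python. It is an illustrative sketch, not Orama’s implementation: it lowercases and strips accents (so “decerebre” matches “décérébré”), maps each token to the set of document IDs containing it, and treats a token as a match when it starts with the query term (“index” finds “indexing”) or is within one edit of it (a single typo). The names `normalize`, `within_one_edit`, `build_index`, and `search` are hypothetical.

```python
import re
import unicodedata
from collections import defaultdict


def normalize(text: str) -> list[str]:
    """Lowercase, strip diacritics, and split into alphanumeric tokens."""
    decomposed = unicodedata.normalize("NFKD", text.lower())
    ascii_only = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return re.findall(r"[a-z0-9]+", ascii_only)


def within_one_edit(a: str, b: str) -> bool:
    """True if a and b differ by at most one insertion, deletion, or substitution."""
    if abs(len(a) - len(b)) > 1:
        return False
    if len(a) > len(b):
        a, b = b, a  # make a the shorter string
    i = j = edits = 0
    while i < len(a) and j < len(b):
        if a[i] == b[j]:
            i += 1
            j += 1
            continue
        edits += 1
        if edits > 1:
            return False
        if len(a) == len(b):
            i += 1  # substitution consumes a character from both strings
        j += 1      # otherwise it is an insertion into the shorter string
    return edits + (len(b) - j) + (len(a) - i) <= 1


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map every normalized token to the IDs of the documents containing it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in normalize(text):
            index[token].add(doc_id)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """IDs of documents where every query term matches some token (prefix or one edit)."""
    results = None
    for term in normalize(query):
        hits: set[str] = set()
        # A real engine would use a trie or similar instead of scanning every token.
        for token, doc_ids in index.items():
            if token.startswith(term) or within_one_edit(term, token):
                hits |= doc_ids
        results = hits if results is None else results & hits
    return results or set()
```

Every token and posting set in this sketch lives in RAM and is rebuilt from the raw text, which is the same trade-off that makes an in-memory engine need the full document bodies locally.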
To work around this storage bottleneck, I adopted a bit of a hack: I spun up a second Replicache instance dedicated to syncing just the indexable content (the text of each story/feed/post). This ran on a Web Worker, in parallel with the main Replicache instance (which handled metadata, etc.). With this separation, I could keep most of the app snappy, and only the search-specific data sync would churn through large text blobs.

It worked… sort of. The app’s performance improved, but having two replication streams increased the chances of transaction conflicts. Because of its stateful nature, Replicache’s sync demands a strong DB isolation level, so more concurrent data meant more retries on the push/pull endpoints. In other words, I achieved local search at the expense of a more complex and somewhat more brittle syncing setup. I told you the DX gets more complicated at the limit! And we are just getting started.

I also planned to add vector search on the frontend to complement Orama’s keyword search. First I tried using transformers to run embedding models in the browser and do all semantic indexing on-device. But an issue with Vite forced the user to re-download the ML model on every page load, which made that approach infeasible. That issue has since been solved, so it remains an experiment to try again.

Later I tried generating the embeddings on the server and syncing them to the client, but the napkin math was not napkin mathing:

- With a context length of 512 tokens, most documents (~10k chars each) would need at least 5 chunks to cover their content (10,000 chars / ~4 chars per token ≈ 2,500 tokens, which is ~5 × 512-token chunks). That means ~5 embedding vectors per document.
- For ~10k documents, that’s on the order of 50k vectors.
- If each embedding has 768 dimensions (a common size for BERT-like models), that’s 38.4 million floats in total.
- 38.4M floats, at 4 bytes each (32-bit float), would be ~150 MB of raw numeric data to store/transmit.
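The raw-size half of this napkin math is easy to reproduce. A small Python sketch, assuming ~4 characters per token, 512-token chunks, and 4-byte float32 values as above; the 384-dimension rows correspond to a smaller model like Arctic Embed XS, and the int8 row is an added illustration of what 8-bit quantization would save, not something measured in Fika. The function name `embedding_bytes` is hypothetical.

```python
import math

DOCS = 10_000            # ~10k entities for a power user
CHARS_PER_DOC = 10_000   # up to ~10k characters each
CHARS_PER_TOKEN = 4      # rough rule of thumb for English text
CONTEXT_TOKENS = 512     # embedding model context length


def embedding_bytes(dims: int, bytes_per_value: int) -> int:
    """Raw bytes needed to store every chunk embedding for the whole corpus."""
    tokens_per_doc = CHARS_PER_DOC / CHARS_PER_TOKEN             # 2,500 tokens
    chunks_per_doc = math.ceil(tokens_per_doc / CONTEXT_TOKENS)  # 5 chunks
    vectors = DOCS * chunks_per_doc                              # 50,000 vectors
    return vectors * dims * bytes_per_value


for label, dims, width in [
    ("768-d float32", 768, 4),   # prints 153.6 MB
    ("384-d float32", 384, 4),   # prints 76.8 MB
    ("384-d int8", 384, 1),      # prints 19.2 MB
]:
    print(f"{label}: {embedding_bytes(dims, width) / 1e6:.1f} MB")
```

The 153.6 MB (about 146 MiB) result matches the ~150 MB estimate; text encodings such as JSON then multiply it by the number of characters each float takes.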
And because we use JSON for transport, these floats would be encoded as text. The actual payload would balloon to somewhere between ~384–500 MB of JSON 😱 (each float turned into a string of roughly 10–13 characters including its comma separator, e.g. "-0.0123456,"). That’s a lot to sync, store, and keep in memory.

Could we quantize the vectors or use a smaller embedding model? Sure, a bit: for example, Snowflake’s Arctic Embed XS model has 384-dimensional vectors, which would halve the size. But we’d still be talking hundreds of MB of data. And the more we started optimizing for size (bigger context lengths, aggressive quantization, etc.), the more the semantic search quality would degrade. So, I decided to yank the wax strip and toss the hairy dream of local semantic search straight into the trash.

In fact, to really convince myself, I ran two versions of Fika for a while: one used my earlier server-side hybrid search (Typesense with both keywords and vectors), and the other used local-only keyword search (Orama). After a couple of weeks of using both, I came to a counterintuitive conclusion: the purely keyword-based local search was actually more useful. The hybrid semantic search was, in theory, finding related content via embeddings, but in practice it often led to noisier results. As I was tuning the system, I found myself giving more and more weight to the keyword matches. Perhaps it’s just how I search: I tend to remember pointers to the things I look for and rarely search for abstract terms. I could have bolted on a re-ranker to refine the results, but that would be a hassle to implement locally.

And more importantly, the hybrid results sometimes failed the “why on earth did this result show up?” test. For example: looking for a recipe, I typed “bread” and got an AI paper with nothing highlighted… oh wait, I see... there’s a paragraph in this AI paper that mentions croissants. Are croissants semantically related to bread?
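Keyword search passes the “why did this show up?” test because the matched terms can be pointed at. A minimal sketch of query-term highlighting in Python, assuming simple case-insensitive whole-word matching and `<mark>` tags as markers (both illustrative choices, not what Orama or FlexSearch do internally). A semantic hit like the croissant paragraph comes back with zero matches, so there is nothing to highlight.

```python
import re


def highlight(text: str, query: str, start: str = "<mark>", end: str = "</mark>") -> tuple[str, int]:
    """Wrap every whole-word, case-insensitive occurrence of a query term.

    Returns the highlighted text and the number of matches, so a UI can tell
    a keyword hit (matches > 0) from an unexplained semantic hit (matches == 0).
    """
    terms = [re.escape(t) for t in query.split() if t]
    if not terms:
        return text, 0
    pattern = re.compile(r"\b(" + "|".join(terms) + r")\b", re.IGNORECASE)
    # re.subn returns (new_text, number_of_substitutions).
    return pattern.subn(lambda m: f"{start}{m.group(0)}{end}", text)
```

For example, `highlight("A simple bread recipe", "bread")` returns `("A simple <mark>bread</mark> recipe", 1)`, while a paragraph that only mentions croissants returns its text unchanged with a count of 0.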
Opaque semantic matches can sabotage user trust in search results. In a keyword search, if I search for a keyword, I either get results that contain it or I don’t. It’s clear. With semantic search, you sometimes get results that are “kind of” related to your query, but without any obvious indication why. That can be frustrating.

So I ultimately dropped the hybrid approach and went all-in on Orama for keyword search. Immediately, the results felt more focused and relevant to what I was actually looking for. Bonus: the solution was cheaper (no need to generate embeddings) and simpler to operate in production.

However, I haven’t addressed the elephant in the room yet: Orama is an in-memory engine. Remember that ~100 MB of text? To index that, Orama was building up its own internal data structures in memory, which for full-text search can easily be 2–3× the size of the raw text. With my own data, I was allocating ~300 MB of RAM in the browser just for the search index. That’s ~300 MB of the user’s memory held just in case they perform a search during that session, and in many sessions, they might not. What a waste. That’s far from good engineering.

But we are not done with the bad news. On the server, you can buy bigger machines with predictable resources that match your workload. But with local-first, you have to deal with your customers’ devices. For low-end devices (phones), the worst part was not the memory overhead but the index build time. Every time the app loaded, I’d have to take all those documents out of IndexedDB and feed them into Orama to (re)construct the search index in memory. I offloaded this work to a Web Worker thread so it didn’t block the UI, but on a better-than-average mobile phone (my Google Pixel 6), this indexing process was taking on the order of 9 seconds. Think about that: if you open the app fresh on your phone and want to search for something right away, you’d be waiting ~9 seconds before the search could return anything.
That’s worse than a cloud-based approach, and not an acceptable trade-off.

I tried to mitigate this by using Orama’s data persistence plugin, which lets you save a prebuilt index to disk and load it back later. Unfortunately, this plugin uses a pretty naive approach (essentially serializing the entire in-memory index to a JSON blob). Restoring that still took on the order of seconds, and it also created a huge file on disk. I realized that what I really needed was a disk-based index, where searches could be served by reading just the relevant portions into memory on demand (kind of like how SQLite or Lucene operate under the hood).

Last and final solution: FlexSearch

As if someone were listening to my frustrations, around March 2025 an update to the FlexSearch library was released adding support for persistent indexes backed by IndexedDB. In theory, this gave me exactly what I wanted: the index lives on disk (so app reloads don’t require full re-indexing), and memory is used only as needed to perform a search (and in an efficient, paging-friendly way).

I jumped on this immediately. The library’s documentation was thorough, and the API was fairly straightforward to integrate. I basically replaced the Orama indexing code with FlexSearch, configuring it to use IndexedDB for persistence. The difference was night and day:

- On a cold start (new device with no index yet), the indexing process incrementally builds the on-disk index. This initial ingestion is still a bit heavy (it might take a few minutes to pull all the data and index it), but it’s a one-time cost per device.
- On subsequent app loads, the search index is already on disk and doesn’t need to be rebuilt in memory. A search query lazily loads the necessary portions of the index from IndexedDB. The perceived search latency is now effectively zero once that initial indexing is done. Even on low-end mobile devices, searches are near-instant because all the heavy lifting was done ahead of time.
- Memory usage is drastically lower. Instead of holding hundreds of MBs in RAM for an index that might not even be used, the index now stays in IndexedDB until it’s needed. Typical searches only touch a small fraction of the data, so the runtime memory overhead is minimal. (If a user never searches in a session, the index stays on disk and doesn’t bloat memory at all.)

At this point, I was also able to simplify my syncing strategy. I no longer needed that second Replicache instance continuously syncing full document contents in the background. Since the FlexSearch index persists between sessions, I could handle updates in an incremental way. I set up a lightweight diffing mechanism using Replicache’s experimentalWatch API:

- Whenever Replicache applies new mutations from the server, I get a list of changed document IDs (created/updated/deleted).
- I compare those IDs to what’s already indexed in FlexSearch. The difference tells me which documents I need to add to the index, which to update, and which to remove.
- For any new or changed documents, I fetch just those documents’ content (lazily, via an API call to get the full text) and feed them into the FlexSearch index.

This acts as an incremental ingestion pipeline in the browser. On a brand new device, it will detect that “no documents are indexed” and then start pulling content in batches until the index is fully built. After that, updates are very small and fast.

This approach turned out to be surprisingly robust and easy to implement. By removing the second Replicache instance and doing on-demand content fetches, I reduced a lot of the database serialization conflicts. And because the index persists, even if the app crashes mid-indexing, we can resume where we left off next time.

The end result is that search on Fika is now truly local-first: it works offline, it has essentially zero latency, and it returns very relevant results.
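The diffing step of the incremental ingestion pipeline reduces to set arithmetic over document IDs. A hypothetical Python sketch, not Fika’s code and not the Replicache or FlexSearch API: `live_versions` maps each document that currently exists locally to a version number, and `indexed_versions` records what the search index already holds. Tracking a per-document version is an added assumption here, used to tell updated documents apart from unchanged ones; `plan_ingestion` and `batches` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class IngestionPlan:
    to_add: list[str]
    to_update: list[str]
    to_remove: list[str]


def plan_ingestion(live_versions: dict[str, int], indexed_versions: dict[str, int]) -> IngestionPlan:
    """Diff the documents that exist locally against what the search index holds."""
    live, indexed = set(live_versions), set(indexed_versions)
    return IngestionPlan(
        to_add=sorted(live - indexed),
        to_update=sorted(i for i in live & indexed if live_versions[i] != indexed_versions[i]),
        to_remove=sorted(indexed - live),
    )


def batches(ids: list[str], size: int):
    """Yield IDs in fixed-size batches so content can be fetched incrementally."""
    for start in range(0, len(ids), size):
        yield ids[start:start + size]
```

Diffing the full ID sets also covers the cold-start case: with an empty index, every live document lands in `to_add` and is fetched batch by batch. And since each batch is recorded in the persistent index as it completes, re-running the plan after a crash only returns the documents that are still missing.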
The cost is the initial ingestion time on a new device and some added complexity in keeping the index in sync. But I’m comfortable with that trade-off.

Conclusion

As I mentioned at the beginning, the developer experience of building local-first software becomes more challenging as you push more complex, data-heavy features to the client side. Most of the time, you can’t YOLO it. You have to do the math and account for the bytes and processing time on users’ devices (which you don’t control). In a cloud-based architecture you should also care about efficiency, but traditionally many web apps get away with sending fairly small amounts of data for each screen, so developers are less forced to think about, say, how many bytes a float32 takes up in JSON.

In the end, I would dismiss the DX claims and only recommend building local-first software if the benefits align with what you need:

- It resonates with your values. You care about data ownership and the idea that an app should keep working even if its company goes on an “incredible journey”. Your business model revolves around “backing up your data” instead of “lending your data”.
- The performance characteristics match your use case. Local-first often means paying a cost up front (initial data sync/download, indexing, etc.) in exchange for zero-latency interactions thereafter. Apps with short sessions need to optimize for fast initial load times, but apps with long sessions and many interactions benefit from local-first performance characteristics.
- You need the out-of-the-box features it enables. Real-time collaborative editing, offline availability, and seamless sync are basically “free” with the right local-first frameworks. Retrofitting those features onto a cloud-first app is extremely difficult.

If none of the above are particularly important for your project, I’d say you can safely stick to a more traditional cloud-centric architecture. Stateless will always be easier than stateful.
Local-first is not a silver bullet for all apps, and as I hope my story illustrates, it comes with its own very real trade-offs and complexities. But in cases where it fits, it’s incredibly rewarding to see an app that feels as fast as a native app, works with or without internet, and keeps user data under the user’s control. Just get your calculators ready, and maybe a good supply of coffee for those long debugging sessions. Good luck, and happy crafting!

- local-first

- search

-

AI is eating the Internet

Jul 28 ⎯ “You see? Another <something> ad. We were just talking about this yesterday! How can you be so sure they’re not listening to us?” – My wife, at least once a week.

Internet advertising has gotten so good, it’s spooky. We worry about how much “they” know about us, but in exchange, we got something future generations may not: free content and services, and a mostly open Internet. It is an unprecedented Faustian bargain, one that is now collapsing.

A Memory of Summer: The Ad-Based Utopia

At the epicenter of the modern Internet sits Google. Forget the East India Company: Google, with an absurd $100B+ in net income, is arguably the most successful business in history. By commanding nearly 70% of the global browser market and 89% of the search engine market, it dominated the Internet through sheer reach.

How did this happen? A delicate balance of incentives where every player on the Internet got exactly what they wanted:

- Consumers enjoyed free access to virtually unlimited information and online services.
- Content creators got a steady stream of visitors flowing from search engines, which they could monetize via ads or conversions.
- Advertisers gained demand for their products at a reasonable price, thanks to unprecedented targeting precision.

Google crawled the entire Internet, and site owners didn’t just allow it: they optimized for it. Users found what they wanted (most of the time, for free), while advertisers happily footed the bill because digital ad targeting finally began to solve the old John Wanamaker dilemma: “Half the money I spend on advertising is wasted; the trouble is I don't know which half.”

Later on, Meta (then Facebook) doubled down on the same ad-driven premise. The social graph plus cross‑device identity made its targeting scary good. And advertisers flooded in. Google saw the threat, but Zuck went full Carthago delenda est.
By the late 2010s, the Google-Facebook duopoly ran the ad‑funded Internet: Google caught “I’m looking for it” intent; Meta created “make me want it” demand, and both printed money.

Winds of Winter: The Transformer revolution

Even the mightiest empires fall, and Google unknowingly sowed the seeds of its own undoing. In 2017, Google researchers published “Attention Is All You Need,” introducing the Transformer architecture. Originally meant to improve machine translation, this innovation also led to better search quality through models like BERT.

When OpenAI’s ChatGPT launched (as a free research demo in late 2022), it spread like wildfire, reaching 100 million users in just two months, the fastest adoption of any consumer application at the time. Suddenly, millions of people started replacing the search box with a chat box. Why type a query and sift through ten blue links (of which 2–5 are ads) when an AI could synthesize an answer for you? The Internet’s longstanding equilibrium began to topple.

Today’s landscape is one of turmoil, change, excitement, and fear. Content is no longer traded for traffic; content has become training data. And data is the fossil fuel of AI. This shift has unleashed a wave of new players crawling the web, often offering nothing in return. Much like early Google News aggregating snippets (which infuriated publishers), AI companies now repurpose the content they ingest and serve it directly in chat responses or summaries, without sending users to the original source. It’s breaking the ad-based Faustian bargain.

Content creators, realizing their work is being pillaged instead of traded, are rapidly fencing off their gardens. Paywalls are rising higher than ever, and anti-bot measures are getting more aggressive. In an ironic twist, human users often get caught in the crossfire of the bot-hunting Inquisition (CAPTCHAs, login walls, broken RSS feeds), as sites desperately try to distinguish real readers from scraping bots.
The once-open web is curling back into a fragmented, medieval patchwork of walled cities.

[Image: Walled cities against the bots]

Even Google, realizing its core search business is at risk, has decided to commit seppuku rather than be usurped by outsiders. Google is now rolling out AI-generated answers in search results, essentially cannibalizing its own traffic. “Sure, Search might die, but I’d rather kill it myself first.” Like the dolphins in The Hitchhiker’s Guide to the Galaxy, they’re waving as they swim away: “So long, and thanks for all the crawled content.”

Early evidence from Google’s experiment is sobering: when Google shows AI answers, organic results suffer a ~50% drop in clicks. In other words, content creators are getting drastically fewer visitors because Google’s AI is answering the query directly, and advertisers, seeing organic reach dry up, have little choice but to pour more money into ads for visibility. Turns out the obituaries were premature: Google’s ad revenue is up 10%.

Meanwhile, Meta has been fighting its own wars. Apple’s App Tracking Transparency (ATT) measures dealt a heavy blow to Meta’s ad targeting in 2022, with an estimated 37% decrease in click-through rates. Zuckerberg’s costly bet on the Metaverse didn’t pan out, and the core ad-based model remains under threat. Zuck is now in the arena, waving an American flag while fighting everyone with his open-source AI strategy. But the Chinese labs are playing the same game, and despite GPU bans, they are taking over the benchmarks.

And then there’s Cloudflare, quietly rubbing its hands like an old-school gangster offering protection. “Your content is safe with us,” they say, while simultaneously launching browser automation for those with crawling needs. In July 2025, Cloudflare announced a new service to block AI crawlers by default unless they pay up or get permission. It’s offering a potentially lucrative third way for the Internet’s future. It’s not a classic paywall. It’s not ads.
It’s essentially “Pay to Crawl”: think of it as the digital equivalent of a nightclub’s ladies’ night, where humans get in free and bots pay cover. Reddit, Stack Overflow, and major news publishers are already striking data licensing deals, turning this data monetization model into reality. In effect, if data is fossil fuel, the web’s knowledge is being fracked at all costs. Lastly, as generative bots overrun the public web, humans are retreating to more intimate corners: the cozy web of private chats, invite-only forums, and niche communities. When every other social post or blog could be written by ChatGPT, people naturally gravitate to spaces where real identity and authenticity are ensured. It’s the chess problem: robots may play perfectly, but nobody shows up to watch robots play each other. This has led to a resurgence of email newsletters, influencers, small Discord communities, and Substacks where a named human is accountable for the content.

robots dominate the open web

A Dream of Spring: The Internet to come

Eventually, the dust will settle. Some players will win (and take all); others will fall. But a new equilibrium must emerge, one that rebalances the interests of content creators, consumers, and advertisers. I could stop here and call it a day. But if you’ve made it this far, you deserve more than an open‑ended conclusion. The past and present are easy. An LLM can already write them better than I can. The future? That’s exposure. I’ll make the call and own it if I’m wrong. Looking ahead, the collapsing ad-funded, crawl-for-traffic model seems likely to resolve into three intertwined markets:

Ad-based Consumer AI: Whether it’s an AI chatbot, personal assistant, or generative search hybrid, the winner of the consumer AI category will become a new Super-Aggregator. They’ll replace search (and perhaps even browsers, which every company seems to be building) as the gateway through which billions access information and services.
And they will monetize primarily via advertising. Why ads? Again? Because it’s too much money for anyone to leave on the table. In 2024, Google made ~$265B from ads. Matching that with subscriptions alone would be herculean: it’d take roughly 13 billion $20 payments a year, or about 1.1 billion people paying $20 every single month. That’s about one in seven people on Earth, and demographic collapse and virtual waifus won’t make that math any easier. The business logic points toward a free (or low-cost) consumer AI service with a massive user base, monetized by targeted advertising, just as search and social networks were.

The Open Cozy Web: Trust in faceless content and large media will erode. When any article might be AI-generated slop, people will increasingly trust individuals and communities instead. There will simply be too much content to sift the signal from the noise on your own. Influencers, domain experts, and friend networks will become the sources people rely on, more than generic brands. We’re already seeing the shift: readers flock to personal Substack newsletters from writers they trust, and niche, closed communities thrive while broad public forums struggle with bot moderation. In this future, human provenance is the key problem to solve. We’ll need individual “fingerprints” to verify that specific humans created specific pieces of content. Once we can reliably prove and label human‑made work, I’m optimistic that the pendulum will swing back from centralized, walled platforms to a constellation of decentralized, open spaces.

Pay for Crawl: Quality human-generated data will become scarce and more valuable, especially as the Internet’s data gets increasingly contaminated by AI output. Models are already being trained on AI-generated content, and like royal inbreeding, the results aren’t looking good. Pay‑per‑Crawl will displace content licensing. Licensing builds walls: everything behind a fence, more logins, more friction, less traffic.
Pay‑per‑Crawl strikes the perfect balance: humans can roam freely, but bots pay at the gate. Perhaps browsers will carry a kind of “human token” to prove you’re not a bot (a modern twist on HTCPCP). The open web won’t die, but it’s likely to have ID checks at the gate.

robots showing ID at the gate

Who will be the consumer AI winner?

Two frontrunners stand out to me, for very different reasons:

OpenAI: They currently have the strongest consumer AI brand. Despite the clunky name, “ChatGPT” has become synonymous with AI for hundreds of millions of people. OpenAI’s move to integrate web browsing into ChatGPT (with source citations and clickable results) is a clever attempt to repair relations with content creators by sending traffic back. But to truly reach Google-like gargantuan revenues, they would need to introduce advertising or sponsored results. OpenAI’s partnership with Microsoft (which brings Bing’s search index and advertising platform into the mix) hints that ads could be coming. OpenAI can leverage its head start in users, but it faces the challenge of scaling revenue without alienating them: not everyone will tolerate ads in their assistant.

Google: Don’t count out the current king. Google has decades of experience integrating ads in a way users tolerate, and it already has relationships with millions of advertisers. It also still controls Android, Chrome, Gmail, Maps, YouTube, and other personal platforms that give it a ridiculous data and distribution advantage. While Google was caught unprepared by the launch of ChatGPT, by 2025 it had launched capable large models of its own (Gemini, Imagen, Veo, etc.), demonstrating serious technical muscle. Critically, Google doesn’t have to justify a sky-high startup valuation: it’s already immensely profitable and can afford to play the long game. This might allow Google to adopt AI more deliberately, balancing the interests of advertisers, content creators, and consumers as it transitions.
In the end, Google’s biggest advantage is that it controls the default channels (your phone, your browser, your email) and can bake its AI into all of them. That, combined with a less constrained business model (they won’t mind cannibalizing some revenue to protect their moat), makes Google a formidable contender in the consumer AI game. My bet is on Google pulling through. There, I said it.

So, the bargain that ruled the Internet for two decades is ending. The era of free content, propped up by invisible ad targeting, is giving way to something new: something perhaps more balanced, but also more fragmented. “Software is eating the world,” Marc Andreessen declared in 2011. Now, “AI is eating the Internet.” The only questions are: who gets to digest the value, and how will the meal be shared? What do you think? What’s your take? The winter winds are howling, but perhaps, just perhaps, a new spring is coming.
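The subscription math in the Ad-based Consumer AI section is easy to get wrong by mixing annual and monthly figures. A back-of-the-envelope check in Python, using the ~$265B 2024 ad revenue and the $20/month price from the text; the world population of about 8.1 billion is an assumed round figure, not one taken from the essay:

```python
# Back-of-the-envelope: how many $20/month subscribers would match
# Google's ~$265B in annual ad revenue (2024, figure from the essay)?
AD_REVENUE_USD = 265e9      # annual ad revenue, approximate
PRICE_PER_MONTH_USD = 20    # subscription price per month
MONTHS_PER_YEAR = 12
WORLD_POPULATION = 8.1e9    # assumption: rough 2024 world population

# Number of individual $20 payments needed over one year.
monthly_payments = AD_REVENUE_USD / PRICE_PER_MONTH_USD

# Number of people who would have to pay every month, all year long.
subscribers = monthly_payments / MONTHS_PER_YEAR

# Fraction of everyone alive that would need to subscribe.
share_of_humanity = subscribers / WORLD_POPULATION

print(f"{monthly_payments / 1e9:.2f} billion $20 payments per year")
print(f"{subscribers / 1e9:.2f} billion year-round subscribers")
print(f"{share_of_humanity:.0%} of everyone alive")
```

About 13 billion payments a year works out to roughly 1.1 billion people paying every month, around 14% of the world's population under the assumed figure. That would dwarf today's largest consumer subscription services.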

- ai

- essay


-

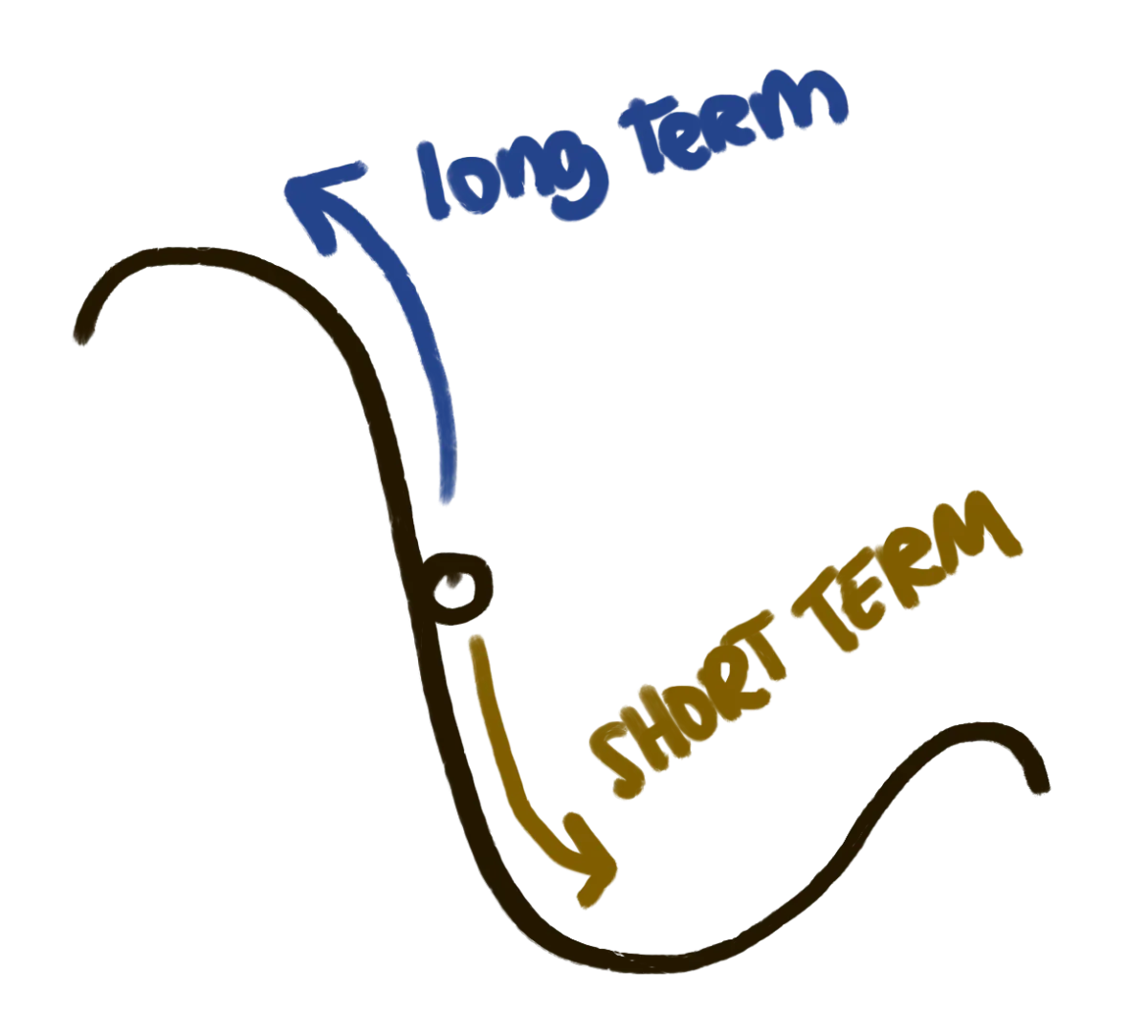

Ruthless prioritization while the dog pees on the floor

Jun 30 ⎯ Great article on prioritization, and the friction it generates when people don’t understand the most important tenet of prioritization: “Time is a zero-sum resource: An hour spent on one thing necessarily means not spending an hour on the entire universe of alternative things.” There are always more things to be done than time to do them. Hence, in order to do The Most Important Thing, we need to say no to everything else (or at least, not yet). There will always be people in the organization who disagree, and in my opinion, the biggest divide is about time horizons. Some people work with shorter time horizons: they are more aware that not being alive next month matters more than not being alive next year, and an accumulation of failed strategic initiatives has made them more cynical. This pisses off long-term thinkers, who feel trapped in a local maximum and feel the organization is constantly chasing opportunities instead of being strategic. So, the problem with ruthless prioritization is that no one really knows where that 10x level is. Short-term and long-term thinkers have different risk profiles and will chase different directions. Great companies do both. Willingly or accidentally, they allocate most resources to short-term initiatives, while leaving some percentage to chase long shots. I think this is another form of slack, which is what allows complex organisms to find global maxima.

-

Building a web game in 2025